"Nothing to see here": No structural brain differences as a function of the Big Five personality traits from a systematic review and meta-analysis. Yen-Wen Chen, Turhan Canli. Personality Neuroscience, Volume 5, Aug 9 2022. https://www.cambridge.org/core/journals/personality-neuroscience/article/nothing-to-see-here-no-structural-brain-differences-as-a-function-of-the-big-five-personality-traits-from-a-systematic-review-and-metaanalysis/BD74C86346A7C3B65E255FA9F1C6D797

Abstract: Personality reflects social, affective, and cognitive predispositions that emerge from genetic and environmental influences. Contemporary personality theories conceptualize a Big Five Model of personality based on the traits of neuroticism, extraversion, agreeableness, conscientiousness, and openness to experience. Starting around the turn of the millennium, neuroimaging studies began to investigate functional and structural brain features associated with these traits. Here, we present the first study to systematically evaluate the entire published literature of the association between the Big Five traits and three different measures of brain structure. Qualitative results were highly heterogeneous, and a quantitative meta-analysis did not produce any replicable results. The present study provides a comprehensive evaluation of the literature and its limitations, including sample heterogeneity, Big Five personality instruments, structural image data acquisition, processing, and analytic strategies, and the heterogeneous nature of personality and brain structures. We propose to rethink the biological basis of personality traits and identify ways in which the field of personality neuroscience can be strengthened in its methodological rigor and replicability.

3. Discussion

MRI studies have come under criticism for reporting under-powered and non-replicable findings (Button et al., 2013; Szucs & Ioannidis, 2017). Here, we used a systematic review and a meta-analysis approach to discern which findings, if any, would replicate reported associations between personality and brain measures. Surprisingly, we found no evidence for robust associations between any of the Big Five traits and brain structural indices (i.e., GMV, CT, and SA). Although we observed some consistent results from the qualitative systematic review, these findings failed confirmation when we used a quantitative meta-analytic approach.

3.1. Comparison with previous systematic review and/or meta-analysis studies

To our knowledge, only three studies used a systematic review and/or quantitative meta-analytic approach to evaluate the replicability of associations between personality traits and brain structural indices. One meta-analysis (Mincic, 2015) examined the association between GMV and a broad composite meta-trait named “negative emotionality” and included studies that measured one of these traits: Behavioral inhibition, harm avoidance, trait anxiety, or neuroticism.

Two other studies restricted their analyses to single “Big Five” traits only. One review by Montag, Reuter, and colleagues (2013) focused on GMV and neuroticism. These investigators reported heterogeneous findings across studies but noted consistent negative associations with neuroticism in prefrontal regions that included the SFG, MFG, and OFC. This observation is consistent with our systematic review. However, Montag and colleagues did not subject their reviewed studies to a quantitative meta-analysis to determine the robustness of this observation, whereas our meta-analysis failed to confirm this observed association.

The second study was conducted by Lai et al. (2019) to examine the association between GMV and extraversion, using both a systematic review and a meta-analytic approach. Based on quantitative meta-analysis, these investigators reported positive associations in the medOF and PRC, and negative associations in the PHC, SMG, ANG, and MFG. These results contradict our null meta-analysis result for extraversion. The discrepancy might derive from two differences between the meta-analyses. The first difference lies in the studies that were included. First, Lai et al. (2019) only included VBM studies, whereas the present study included both VBM (n = 14) and SBM (n = 4) studies. Thus, four studies using SBM were not included in Lai et al. (2019). Second, Grodin and White (2015) was excluded from the present study due to the personality instrument it used. That study used two subscales (Social Potency and Social Closeness) from the Multidimensional Personality Questionnaire Brief Form as a proxy of extraversion. However, we determined that this instrument does not align with the conceptual structure of the global extraversion trait of the Big Five and therefore excluded this study. Third, two studies that used different image processing, DeYoung et al. (2010) (which did not perform segmentation in the preprocessing) and Yasuno et al. (2017) (which used a T1w/T2w ratio signal), were not included in Lai et al. (2019). In addition, Nostro et al. (2017), which was included in Lai et al. (2019), was excluded from the present study because its sample was not independent and overlapped with that of another, larger study (Owens et al., 2019). The second difference between the present study and Lai et al. (2019) is the version of the meta-analytic software used. Lai et al. (2019) used the previous version of SDM, Anisotropy Effect-Size Seed-based d Mapping (AES-SDM) (Radua et al., 2012, 2014), whereas the present study used the latest version, SDM-PSI. The major improvement of SDM-PSI is the implementation of multiple imputations of study images, which avoids the bias of single imputation and yields a less biased estimate of the population effect size; it is considered more robust than AES-SDM (Albajes-Eizagirre, Solanes, Vieta, et al., 2019).

3.2. Possible explanations of heterogeneous findings

Several possible explanations may account for discrepancies across the studies, including, but not limited to, (1) sample heterogeneity, (2) Big Five personality instruments, (3) structural image data acquisition, processing, and analytic strategies, (4) statistical approach and statistical significance threshold, and (5) the heterogeneous nature of personality and brain structure. The following sections discuss the above-listed factors in greater detail.

3.2.1. Sample heterogeneity

Sample characteristics that potentially contribute to highly heterogeneous results across the literature include mean age and age range, sex, and the inclusion of patient cohorts and different levels of personality traits across samples.

3.2.1.1. Age

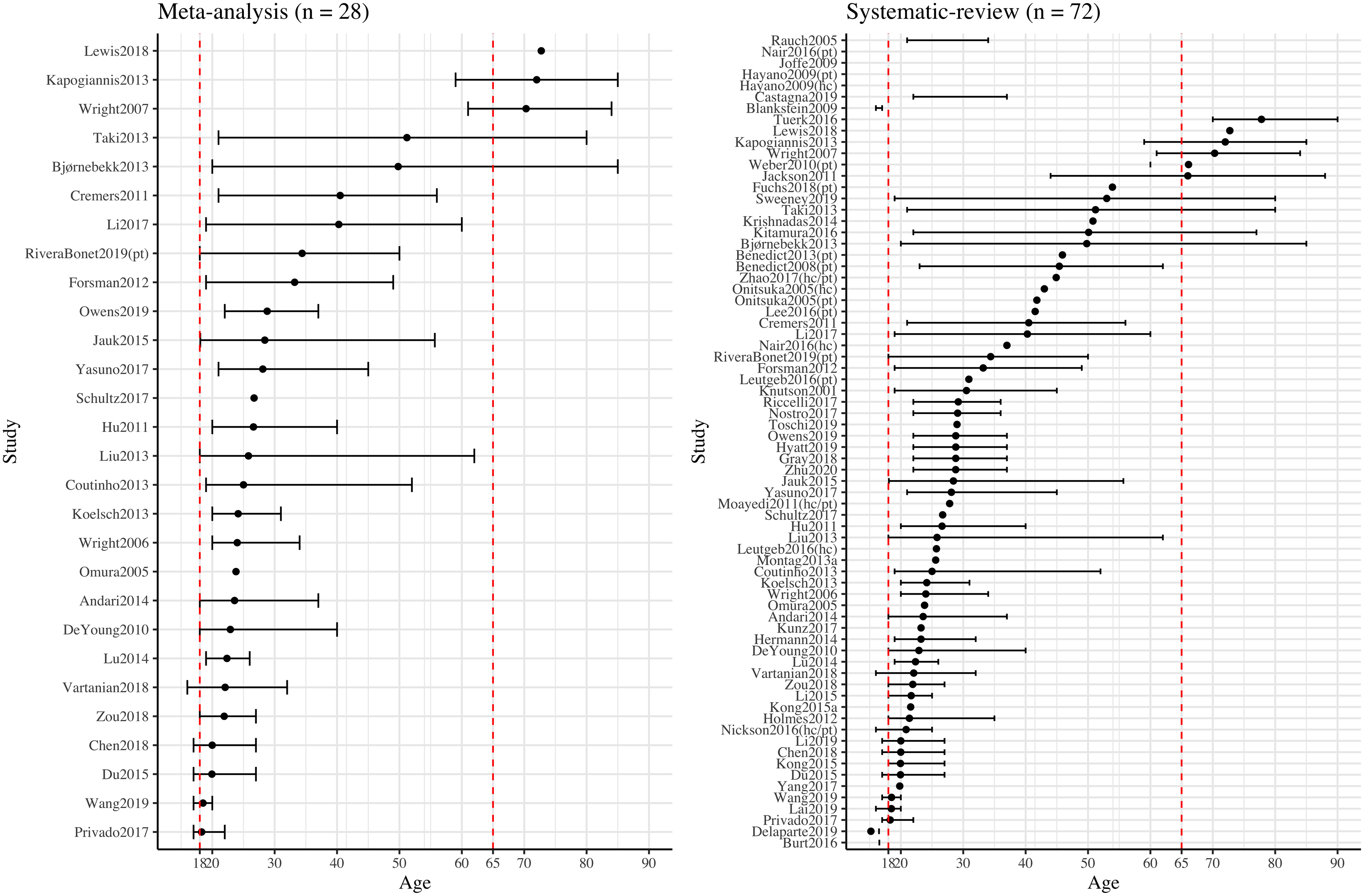

From the systematic review, age correlated negatively with neuroticism, extraversion, and openness, but positively with agreeableness (note that not all studies that examined association between age and personality traits reported significant associations, as shown in Table S18). Those qualitative observations are consistent with previous population-based cross-sectional and mean-level studies (Allemand et al., 2007; Donnellan & Lucas, 2008). We examined whether age could account for heterogeneous meta-analysis results for all five traits using meta-regression, but we did not observe any significant age effect on any of the meta-analyses for five traits. However, the lack of a significant effect may be due, in part, to a narrow age range across the samples, which mainly consisted of adults aged 18 – 40 (Figure 4). This may hinder the generalizability of the results. Only one study (Nickson et al., 2016) examined longitudinal changes of personality traits and two studies (Nickson et al., 2016; Taki et al., 2013) examined longitudinal changes of GMV. Nickson et al. (2016) observed no association between AMY GMV changes and neuroticism and extraversion changes over an average of two-year interval among a mixed sample of patients with major depressive disorder and healthy controls. However, considering the small-to-moderate sample size and heterogeneous composition of the sample, future research with longitudinal study design is required to explore the causal relationship between age, personality traits, and brain structures. Furthermore, none of the included studies considered non-linear associations between age and personality traits, despite evidence in support of such a relation (Donnellan & Lucas, 2008; Terracciano et al., 2005). For example, a curvilinear association was reported between age and conscientiousness, such that the highest scores were observed in middle adulthood (Donnellan & Lucas, 2008). 
Future research that accounts for the non-linear relation between age and personality traits is required to delineate this relationship.

Figure 4. Sample Mean Age and Age Range Distribution of Studies Included in the Systematic Review and Meta-analysis across Big Five Personality Traits and Three Brain Indices. Note. Studies (y-axis) are ordered by the mean age (dot) of each study. Studies are labeled “(hc)/(pt)/(hc/pt)” to indicate whether results from the given study were reported separately for healthy and patient groups or for healthy and patient groups combined. Not all studies provided the mean age or age range; data from those studies are therefore presented incompletely or not presented. The two red dashed vertical lines indicate ages 18 and 65.

3.2.1.2. Sex

From our systematic review, females reported higher levels of personality measures across all five traits, with the exception of extraversion from Omura et al. (2005) and openness from Gray et al. (2018) (note that not all studies that examined sex differences in personality traits reported significant differences, as shown in Table S19), and this observation is consistent with a previous population-based cross-sectional study (Soto et al., 2011). Interestingly, we observed that the mean ages of the participants were younger in Omura et al. (2005) and Gray et al. (2018), compared to other studies that reported higher levels of extraversion and openness in females. This observation is also partially consistent with the observations from Soto et al. (2011), which showed that, on average, sex differences in personality traits vary across different ages. Among all included studies in the systematic review (across five traits and three brain indices), 14 studies examined sex-dependent associations between the Big Five and brain structure. Two main approaches were used: conducting trait–brain analysis separately for females and males, and conducting sex-by-trait interaction analysis. As summarized in the previous section, the results are inconsistent across those studies, such that some studies reported trait–brain associations only in females (Hu et al., 2011), only in males (Hu et al., 2011; Montag, Eichner, et al., 2013; Nostro et al., 2017), or in neither group (Knutson et al., 2001; Liu et al., 2013; Wright et al., 2006, 2007), and interaction analyses produced similarly conflicting results ((Blankstein et al., 2009; Cremers et al., 2011; Sweeney et al., 2019) versus (Bjørnebekk et al., 2013; J. C. Gray et al., 2018; Lewis et al., 2018; Wang et al., 2019)). We then examined whether sex (using the proportion of females) could account for heterogeneous meta-analysis results for all five traits using meta-regression, but again we did not observe significant effects. The potential explanations for sex differences include sex-related hormonal variability (De Vries, 2004), early biological and social developmental trajectories (Blankstein et al., 2009; Goldstein et al., 2001), and social processing differences in response to the environment (Wager et al., 2003). Given the mixed results in the literature, future research should take sex differences into account when examining the association between personality traits and brain structures.

3.2.1.3. Inclusion of patient cohorts and different levels of personality traits across samples

Among the systematic review studies (across five traits and three brain indices), 14 studies included patient cohorts, as summarized in Table S17. Most studies reported a higher mean level of neuroticism and lower mean levels of extraversion and conscientiousness in patient cohorts, compared to healthy individuals (note that not all studies reported group differences in these three traits, as shown in Table S17), whereas no mean-level differences were reported for agreeableness and openness. Among those 14 patient cohort studies, three studies reported group differences in trait–brain associations, noting opposite associations between patients and healthy participants. It is possible that different levels of personality traits between patient and healthy groups contribute to the conflicting trait–brain associations. For example, a higher mean level of neuroticism was observed among patients with alcohol use disorder, compared to healthy participants (Zhao et al., 2017). However, no group mean-level difference was observed in Nair et al. (2016) and Moayedi et al. (2011). Alternative explanations include symptoms associated with the given medical or psychiatric conditions and brain structural differences underlying those conditions. To remove the potential effect of patient cohorts on the meta-analysis, we conducted a sub-group meta-analysis excluding patient cohort studies, and the result for neuroticism (the only trait with a patient cohort study in the meta-analysis) remained unchanged.

Considering the potential influence of levels of personality traits across studies among non-patient studies, we compared the mean scores of personality trait measures among systematic review studies that reported contradictory associations. For example, two included studies reported associations in opposite directions between openness and PCC GMV, with a higher mean level of openness reported in Yasuno et al. (2017) compared to Kitamura et al. (2016). On the other hand, another two studies that reported associations in opposite directions between extraversion and MFG GMV reported comparable mean-level extraversion scores (Blankstein et al., 2009; Coutinho et al., 2013). However, due to differences in personality trait instruments and scoring methods (e.g., some studies reported raw scores, whereas others reported T scores) and the lack of reported personality scores in some studies, it is difficult to determine whether the levels of personality traits might play a role in the conflicting results we observed across the included studies.

3.2.2. Heterogeneity in Big Five trait instruments

The use of different personality trait instruments might contribute to discrepancies across included studies. The most commonly used instruments were the Neuroticism, Extraversion, Openness Personality Inventory – Revised (NEO-PI-R) and the NEO-Five-Factor Inventory (NEO-FFI, short version of NEO-PI-R). Other instruments included the International Personality Item Pool (IPIP), Eysenck Personality Questionnaire (EPQ), Big Five Inventory (BFI), Big Five Structure Inventory (BFSI), Big Five Aspects Scale (BFAS), and 16 Personality Factor test (16 PF). Although studies showed high correlations between different instruments, some trait scales showed only low-to-moderate correlations (Gow et al., 2005). We examined whether the use of different instruments (NEO versus non-NEO) could account for heterogeneous meta-analysis results using sub-group analysis with only studies using NEO (either NEO-PI-R or NEO-FFI), but we did not observe significant results for any of the five traits. In addition, all the instruments listed were self-report questionnaires. Studies have suggested combining self-report with observation- and interview-based assessments and informant reports (Connolly et al., 2007; Hyatt et al., 2019), or using physiological responses (Taib et al., 2020), to better capture the complex construct of personality and avoid the bias of self-report.

3.2.3. Heterogeneity in structural image data acquisition, processing, and analytic strategies

The heterogeneities of the structural image data acquisition, (pre)processing, and analytic strategies may also have contributed to discrepancies across studies.

3.2.3.1. Structural image data acquisition and processing

The use of different MRI scanners, scanner magnetic field strength, voxel size, and smoothing kernel could result in differences in image spatial resolution and signal-to-noise ratio. In addition, the use of VBM versus SBM processing methods might lead to inconsistent results. Although Kunz et al. (2017) reported highly correlated total GMV results between VBM and SBM processing methods, none of the included studies directly compared VBM and SBM for regional structural results. However, the small number (18 VBM and 5 SBM studies across five traits) of studies we could include in the meta-regression limits any strong conclusions.

3.2.3.2. Structural image data analytic approaches

Different levels of structural analysis, whole-brain versus region-of-interest (ROI), could contribute to the heterogeneous results. Note that only studies using whole-brain voxel-/vertex-wise analysis and the same threshold across the whole brain were included in the meta-analysis to avoid the bias derived from regions with more liberal thresholds (i.e., a priori ROI analysis) (Albajes-Eizagirre, Solanes, Fullana, et al., 2019; Q. Li et al., 2020), which makes a direct comparison between whole-brain and ROI studies difficult. Li et al. (2017) made a direct comparison between whole-brain vertex-wise and whole-brain regional parcellation-based analyses and reported inconsistent results (i.e., different traits were associated with different brain indices in different regions) from the same group of participants. The authors suggested that the two approaches provide different advantages, such that the vertex-wise approach could potentially give more accurate localization, whereas the parcellation-based approach could potentially achieve higher test-retest reliability across populations/studies. Furthermore, the selection of the atlas used to label the brain region for a given peak coordinate (for whole-brain voxel-/vertex-wise studies) or to extract the mean value for pre-defined ROIs (for ROI studies) adds another layer of heterogeneity, as the same voxel/vertex coordinates may be labeled differently across atlases. The atlases used by the included studies can be found in Supplementary Tables S2 - S16. Future research should utilize alternative approaches, such as voxel-/vertex-based and parcellation-based approaches, to evaluate the reliability of the results.

The present study was limited to studies examining brain structure using T1-weighted structural MRI. Alternatively, brain structure can be measured by diffusion MRI. By measuring diffusivity of the water molecules, diffusion imaging allows an indirect way to measure white matter fiber structure (Mori & Zhang, 2006) and it has been implemented in the field of personality neuroscience (e.g., Avinun et al., 2020; Bjørnebekk et al., 2013; Privado et al., 2017; Ueda et al., 2018; Xu & Potenza, 2012). Furthermore, beyond single voxel/vertex and single parcellated region, connectome and network approaches may offer promising alternative ways to investigate patterns of brain structures and their associations with personality (Markett et al., 2018). Network approaches not only measure characteristics of nodes (brain regions) and edges (connections between brain regions) within and between the brain networks but also measure the local and global organization of the brain networks (Sporns & Zwi, 2004). An optimal connectome approach may be achieved by implementing both high-resolution structural and diffusion MRI images (Gong et al., 2012; Sporns et al., 2005).

3.2.4. Heterogeneity in statistical approach and statistical significance threshold

The use of different statistical approaches and statistical significance thresholds might contribute to discrepancies across studies.

3.2.4.1. Covariates in model specification

For model specification, commonly used covariates include age, sex, and global brain indices. Among the included studies in the systematic review (across five traits and three brain indices), covariates included age (n = 55 studies), sex (n = 47), TGMV/mean CT (n = 13), total brain volume (TBV) (n = 9), and intracranial volume (ICV) (n = 26). Other covariates included intelligence (n = 7) and education (n = 3). Studies that directly examined the influence of covariates have suggested that the associations between personality traits and brain structures can change dramatically depending on which covariates are included (Hu et al., 2011; Hyatt et al., 2020). For example, Hu et al. (2011) reported different trait–GMV associations when controlling for different combinations of age, sex, and TGMV, and Hyatt et al. (2020) reported that including ICV as a covariate markedly changed the statistical significance of the relation between various psychological variables (e.g., personality, psychopathology, cognitive processing) and regional GMV. In addition to demographic covariates, some studies also controlled for other personality traits (n = 15 across five traits among systematic review studies). Statistically speaking, estimating the “unique association” of a given trait by including the other traits as covariates seems reasonable, but whether other traits should be included as covariates is still under debate, as some studies argued that the interpretation of a “partial association” might not be straightforward (Lynam et al., 2006; Sleep et al., 2017) and might fail to capture the inter-correlations between personality traits (e.g., Gray et al., 2018; Holmes et al., 2012; Liu et al., 2013). Profile- or cluster-based approaches have been proposed as an alternative way to capture the inter-dependency of personality traits (e.g., Gerlach et al., 2018; Y. Li et al., 2020; Mulders et al., 2018). 
To assess whether the inclusion of different covariates could account for heterogeneous meta-analysis results, we conducted a series of meta-regression analyses with the inclusion of (1) ICV, (2) TGMV, (3) any global brain indices (ICV, TGMV, or TBV), (4) other personality traits as covariates, and (5) the total number of covariates. Our results did not change as a function of any of these variables, although this may also reflect the small number of studies that fulfilled relevant selection criteria. We suggest that future research and future synthesis work should take the inclusion of covariates into account.
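The logic of a meta-regression can be illustrated with a deliberately simplified sketch. The code below fits a fixed-effect, inverse-variance weighted regression of study-level effect sizes on a single moderator (for example, mean sample age, proportion of females, or a 0/1 flag for whether ICV was included as a covariate). It is not the voxel-wise procedure implemented in SDM-PSI; the function name and the three example studies are hypothetical and serve only to show the computation.

```python
from math import sqrt


def weighted_meta_regression(effects, variances, moderator):
    """Fixed-effect meta-regression of effect size on one moderator.

    Each study is weighted by the inverse of its sampling variance.
    Returns (intercept, slope, slope_se).
    """
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    # Weighted means of the moderator and of the effect sizes.
    x_bar = sum(w * x for w, x in zip(weights, moderator)) / total_w
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total_w
    # Weighted sums of squares and cross-products around those means.
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, moderator))
    sxy = sum(
        w * (x - x_bar) * (y - y_bar)
        for w, x, y in zip(weights, moderator, effects)
    )
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # With known sampling variances, Var(slope) = 1 / sxx.
    slope_se = sqrt(1.0 / sxx)
    return intercept, slope, slope_se


# Hypothetical study-level data (NOT taken from the reviewed studies).
example_effects = [0.10, 0.20, 0.30]     # effect size per study
example_variances = [0.01, 0.01, 0.01]   # sampling variance per study
example_mean_age = [20.0, 30.0, 40.0]    # moderator: mean sample age

intercept, slope, slope_se = weighted_meta_regression(
    example_effects, example_variances, example_mean_age
)
print(f"slope = {slope:.4f}, SE = {slope_se:.4f}, z = {slope / slope_se:.2f}")
```

With only a handful of studies the standard error of the slope is large relative to any plausible moderator effect, which is one way to see why a null meta-regression result in a small literature is weak evidence that the moderator does not matter.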

3.2.4.2. Significance threshold and multiple comparison correction

The choice of statistical significance threshold for reporting results should also be considered. Various levels of threshold were used among the included studies, including uncorrected versus corrected for multiple comparison, voxel-/vertex-level versus cluster-level correction, and different multiple comparison correction methods (e.g., family-wise error, Monte Carlo simulated, non-stationary, false discovery rate). Meta-analytic null results from this study may be due, in part, to positive results from studies that applied liberal statistical thresholds to data with small effect sizes, which were not robust enough to be replicable.
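How strongly the choice of threshold changes what counts as a finding can be shown with a small sketch. The code below compares an uncorrected threshold, a Bonferroni correction, and the Benjamini-Hochberg false discovery rate procedure on the same set of p-values. This is a simplification under stated assumptions: neuroimaging packages typically control family-wise error with random field theory, permutation, or Monte Carlo simulation rather than plain Bonferroni, and the p-values below are hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject a test only if p <= alpha / (number of tests)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure controlling the FDR at alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= alpha * k / m.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= alpha * rank / m:
            k_max = rank
    # Reject every hypothesis whose rank is at or below k_max.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            rejected[idx] = True
    return rejected


# Hypothetical p-values for eight regional tests (illustration only).
example_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]

n_uncorrected = sum(p < 0.05 for p in example_p)
n_bonferroni = sum(bonferroni(example_p))
n_fdr = sum(benjamini_hochberg(example_p))
print(n_uncorrected, n_bonferroni, n_fdr)
```

In this toy example the number of "significant" regions differs across the three rules, so two studies with identical data could report different sets of peaks purely because of the thresholding choice.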

3.2.5. Heterogeneous nature of personality and brain structure

3.2.5.1. Replication challenges

Direct replication efforts in studies of personality and brain structure remain scarce to date. Replication was directly assessed in Owens et al. (2019) by comparing results with their earlier study (Riccelli et al., 2017), using data from the same dataset (i.e., HCP). Owens et al. (2019) demonstrated that not all results were replicated in the replication sample or the full sample. The sample characteristics, personality and image data acquisition, and processing were almost identical across those two studies, suggesting, according to Owens et al. (2019), that explanations other than differences in sample characteristics and methodologies should be considered.

3.2.5.2. Heterogeneous nature and individual difference

Finally, we consider the complex and heterogeneous nature of both personality and brain structures. First, a large number of brain regions were reported to be associated with one or more personality traits, and this observation might suggest that personality is constructed by many small effects from different brain regions (M. Li et al., 2019; Montag, Reuter, et al., 2013; Owens et al., 2019). Second, most conclusions from the literature were drawn from group mean levels, which ignored individual differences. Studies have demonstrated the influences of individual differences on cross-sectional and longitudinal changes in personality traits (Allemand et al., 2007; Lüdtke et al., 2009). Both genetic and environmental factors have been suggested to contribute to the heterogeneities. For example, heritability of personality and regional brain structures has been suggested to contribute to heterogeneous associations between the two (Nostro et al., 2017; Valk et al., 2020). On the other hand, ample research has also demonstrated that both personality and brain structure are susceptible to change by the environment and experiences (e.g., Montag, Reuter, et al., 2013; Roberts & Mroczek, 2008). Third, considering the highly heterogeneous nature of personality traits and the individual differences shaped by genetics and environment, it is also possible that the global dimensions of the Big Five personality traits are too broad to have a universal representation in the brain. Based on the NEO-PI-R (Costa & McCrae, 1992), each of the five personality traits is constructed from six facets. Studies have demonstrated that some trait facets contribute more strongly than the remaining facets of a given global trait to the association between that trait and brain structure (Bjørnebekk et al., 2013; M. Li et al., 2019). However, only a few studies included in the systematic review conducted additional facet analysis. Future research should examine the facets and variances of personality traits and brain structures, and studies with regular follow-up are needed to evaluate longitudinal changes.

3.2.5.3. Considerations of statistical approach and power

The highly heterogeneous nature of personality and brain structure also raises the concern of whether the previous literature had sufficient statistical power to detect reliable associations between psychological phenotypes and brain structure (Masouleh et al., 2019). An important aspect of statistical power relates to the image data analytic approach used. Although a voxel- or vertex-based approach could potentially provide more precise localization (T. Li et al., 2017), it also raises the concern of overestimating the statistical effect based on a peak voxel/vertex (Allen & DeYoung, 2017; DeYoung et al., 2010). On the other hand, with the advantages of improving signal-to-noise ratio, improving test-retest reliability, and reducing the number of variables, the use of whole-brain parcellation-based approaches has increased (e.g., Eickhoff et al., 2018; Hyatt et al., 2019; T. Li et al., 2017). Future research should carefully weigh the advantages and limitations of different image analytic approaches and possibly report on the congruency of their findings across multiple analysis methodologies.
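The power concern can be made concrete with a rough calculation. The sketch below uses the standard Fisher z approximation to estimate the sample size needed to detect a given correlation with a single two-sided test. It is an illustration under simplifying assumptions (one test, no multiple comparison correction, bivariate normality), so whole-brain analyses would require larger samples than these figures suggest. The function name is hypothetical.

```python
from math import atanh, ceil
from statistics import NormalDist


def required_n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate N needed to detect correlation r (two-sided test).

    Uses the Fisher z approximation:
        N = ((z_{1 - alpha/2} + z_{power}) / atanh(|r|))^2 + 3
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_power = standard_normal.inv_cdf(power)
    return ceil(((z_alpha + z_power) / atanh(abs(r))) ** 2 + 3)


# Sample sizes for conventional "medium" and "small" correlations.
for r in (0.30, 0.10):
    print(r, required_n_for_correlation(r))
```

Under these assumptions, a correlation of .30 requires roughly 85 participants for 80% power, whereas a correlation of .10 requires several hundred, even before any correction for testing many voxels, vertices, or regions.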

3.3. Does a meaningful relation between the Big Five and brain structure really exist?

Having addressed several plausible factors contributing to heterogeneous findings and replication failure, we also consider the possibility that there is no meaningful relation between the Big Five personality traits and brain structure. Indeed, consistent null-to-very-small associations between the Big Five personality traits and brain structures have been reported by recent large-scale studies with over 1,100 participants (Avinun et al., 2020; Gray et al., 2018). For example, Avinun et al. (2020) investigated both global and facet levels of Big Five personality with structural indices from whole-brain parcellation (cortical CT, cortical SA, and subcortical GMV) and reported that only conscientiousness (R² = .0044) and its dutifulness facet (R² = .0062) showed a small association with regional SA in the superior temporal region. A recent study by Hyatt et al. (2021) of different levels of personality (from meta-traits, global Big Five traits, and facets to individual NEO-FFI items) and different levels of structural measures (from global brain measures to regional cortical and subcortical parcellations) reported that even the largest association (between the Intellect facet of openness and global brain measures) yielded a mean effect size of less than .05 (estimated by R²). As we discussed above, alternative ways to assess brain structural features, such as connectome and network approaches, exist, and these may be better suited to map correspondences to complex traits than traditional approaches.
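To give a sense of scale for these effect sizes, the R² values can be translated into correlation coefficients. The conversion below is a rough illustration only: it assumes that each reported R² can be read as the variance shared in a simple bivariate association, which may not match how the original studies computed it (for example, as an incremental R² over covariates).

```latex
\[
|r| = \sqrt{R^{2}}, \qquad
\sqrt{.0044} \approx .066, \qquad
\sqrt{.0062} \approx .079, \qquad
\sqrt{.05} \approx .22
\]
```

Read this way, the conscientiousness and dutifulness associations correspond to correlations below .10, that is, less than 1% of shared variance.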

Our review of the literature was limited to studies of the Big Five personality model, which emerged from lexical analyses. The model serves a descriptive purpose but is not necessarily explanatory (DeYoung & Gray, 2009; Montag & Davis, 2018). Thus, there is no a priori reason why these constructs should map neatly onto biological systems, although the Big Five traits are associated with biologically based constructs such as Gray’s Reinforcement Sensitivity Theory (RST) and Behavioral Inhibition and Approach System (BIS/BAS) (McNaughton & Corr, 2004), Panksepp’s Affective Neuroscience Theory (ANT) (Montag et al., 2021; Montag & Davis, 2018), and the dimensions of extraversion and neuroticism in Eysenck’s model (Eysenck, 1967). For example, Vecchione and colleagues (2021) applied a latent-variable analysis approach to a sample of 330 adults who completed both Carver and White’s BIS/BAS and the Big Five Inventory. These authors found that BIS correlated with emotional stability (the inverse of neuroticism) and that BAS correlated with extraversion, after controlling for higher-order factors. Moreover, Montag and Panksepp (2017) demonstrated that each of the seven ANT primary emotional systems is associated with at least one global dimension of the Big Five, such as FEAR, ANGER, and SADNESS with neuroticism, PLAY with extraversion, CARE and ANGER with agreeableness, and SEEKING with openness. Considering that the Big Five model closely maps onto biological motivational and emotional systems, future work should include side-by-side comparisons and integrate across conceptual models of personality structure (such as Big Five, RST, and ANT) to provide a comprehensive picture of personality. This could be an iterative process by which future personality models would continue to be refined by neural data and guide the next generation of imaging and other biological (e.g., genetic) studies.

3.4. Limitations

Some limitations should be noted when interpreting the present systematic review and meta-analysis results. First, one of the major challenges of meta-analysis is the trade-off between meta-analytic power and homogeneity of the included studies (Müller et al., 2018). Although no study, to our knowledge, has empirically evaluated the minimal number of studies required for meta-analysis using SDM, Eickhoff et al. (2016) suggested that 17 to 20 studies are required to achieve adequate power using activation likelihood estimation (ALE) meta-analysis, although whether this result is transferable to SDM meta-analysis remains to be determined. In the present meta-analysis of the five personality traits and GMV, we maximized the number of studies by including heterogeneous studies, such as studies with patient cohorts, studies measuring GMD, studies using T1w/T2w ratio signals, and so on, and we demonstrated that the results remained unchanged in more homogeneous sub-group meta-analyses that excluded the abovementioned studies. Second, the present meta-analysis results were derived from the reported peaks, rather than raw data, and this limits our evaluation of variability within each individual study. Lastly, the present review only included peer-reviewed articles. “Grey literature,” which refers to studies not captured by traditional databases and/or commercial publishers, should also be considered to avoid bias when synthesizing the literature (Cooper et al., 2009). Previous reviews have found that peer-reviewed published works had, on average, greater effects and more significant results, compared to unpublished works like theses and dissertations (McLeod & Weisz, 2004; Webb & Sheeran, 2006). It is therefore unlikely that our general conclusion of a lack of associations between the Big Five and structural brain measures would be altered by the inclusion of unpublished studies. Future researchers are encouraged to include studies from various sources and to carefully evaluate the quality of all works to provide reliable reviews.

3.5. Implication and conclusion

To our knowledge, this is the first study to systematically evaluate the entire published literature of the association between the Big Five personality traits and three brain structural indices, using a combination of qualitative and quantitative approaches. Qualitative results suggested highly heterogeneous findings, and the quantitative results found no replicable results across studies. Our discussion pointed out methodological limitations, the dearth of direct replications, as well as gaps in the extant literature, such as limited data on trait facets, on brain-personality associations across the life span, and on sex differences.

When it comes to the relation of Big Five personality and structural brain measures, the field of Personality Neuroscience may have come to a crossroads. In fact, the challenge of finding meaningful and replicable brain–behavior relations is not unique to Big Five personality traits. The same challenge has also emerged for other psychological constructs, including, but not limited to, intelligence and cognition (e.g., attention, executive function), psychosocial processes (e.g., political orientation, morality), and psychopathology (e.g., anxiety, internalizing, externalizing) (Boekel et al., 2015; Genon et al., 2017; Marek et al., 2020; Masouleh et al., 2019). On the one hand, the lack of any significant associations discourages further efforts down this path, as resources may be better spent on following other leads. On the other hand, we suggested several ways to strengthen future work investigating personality–brain structure associations. Consilience may be attained by parallel processing: expanding upon next-generation structural imaging and analysis approaches, while developing new models of personality informed by cutting-edge data prescribing biological constraints. This may be best accomplished by coming to a consensus as a field on how we can best strengthen methodological rigor and replicability and by creating an incentive structure that rewards large-scale consortia building in parallel to smaller-scale creative innovations in methods and constructs.