Regulators Captured

The Wall Street Journal, August 24, 2012, on page A10

http://online.wsj.com/article/SB10000872396390444812704577607421541441692.html

Economist George Stigler described the process of "regulatory capture," in which government agencies end up serving the industries they are supposed to regulate. This week lobbyists for money-market mutual funds provided still more evidence that Stigler deserved his Nobel. At the Securities and Exchange Commission, three of the five commissioners blocked a critical reform to help prevent a taxpayer bailout like the one the industry received in 2008.

SEC rules have long allowed money-fund operators to employ an accounting fiction that makes their funds appear safer than they are. Instead of share prices that fluctuate, like other kinds of securities, money funds are allowed to report to customers a fixed net asset value (NAV) of $1 per share—even if that's not exactly true.

As long as the value of a fund's underlying assets doesn't stray too far from that magical figure, fund sponsors can present a picture of stability to customers. Money funds are often seen as competitors to bank accounts and now hold $1.6 trillion in assets.

But during times of crisis, as in 2008, investors are reminded how different money funds are from insured deposits. When one fund "broke the buck"—its asset value fell below $1 per share—it triggered an institutional run on all money funds. The Treasury responded by slapping a taxpayer guarantee on the whole industry.

SEC Chairman Mary Schapiro has been trying to eliminate this systemic risk by taking away the accounting fiction that was created when previous generations of lobbyists captured the SEC. She made the sensible case that money-fund prices should float like the securities they are.

But industry lobbyists are still holding hostages. Commissioners Luis Aguilar, Dan Gallagher and Troy Paredes refused to support reform, so taxpayers can expect someday a replay of 2008. True to the Stigler thesis, the debate has focused on how to maintain the current money-fund business model while preventing customers from leaving in a crisis. The SEC goal should be to craft rules so that when customers leave a fund, it is a problem for fund managers, not taxpayers.

The industry shrewdly lobbied Beltway conservatives, who bought the line that this was a defense against costly regulation, even though regulation more or less created the money-fund industry. Free-market think tanks have been taken for a ride, some of them all too willingly.

The big winners include dodgy European banks, which can continue to attract U.S. money funds chasing higher yields knowing the American taxpayer continues to offer an implicit guarantee.

The industry shouldn't celebrate too much, though, because regulation may now be imposed by the new Financial Stability Oversight Council. Federal Reserve and Treasury officials want to do something, and their preference will probably be more supervision and capital positions that will raise costs that the industry can pass along to consumers. By protecting the $1 fixed NAV, free-marketeers may have guaranteed more of the Dodd-Frank-style regulation they claim to abhor.

The losers include the efficiency and fairness of the U.S. economy, as another financial industry gets government to guarantee its business model. Congratulations.

Friday, August 24, 2012

Saturday, June 30, 2012

Jonathan Haidt's The Righteous Mind: Why Good People Are Divided by Politics and Religion

Jonathan Haidt: He Knows Why We Fight. By Holman W Jenkins, Jr

Conservative or liberal, our moral instincts are shaped by evolution to strengthen 'us' against 'them.'

The Wall Street Journal, June 30, 2012, page A13

http://online.wsj.com/article/SB10001424052702303830204577446512522582648.html

Nobody who engages in political argument, and who isn't a moron, hasn't had to recognize the fact that decent, honest, intelligent people can come to opposite conclusions on public issues.

Jonathan Haidt, in an eye-opening and deceptively ambitious best seller, tells us why. The reason is evolution. Political attitudes are an extension of our moral reasoning; however much we like to tell ourselves otherwise, our moral responses are basically instinctual, despite attempts to gussy them up with ex-post rationalizations.

Our constellation of moral instincts arose because it helped us to cooperate. It helped us, in unprecedented speed and fashion, to dominate our planet. Yet the same moral reaction also means we exist in a state of perpetual, nasty political disagreement, talking past each other, calling each other names.

So Mr. Haidt explains in "The Righteous Mind: Why Good People Are Divided by Politics and Religion," undoubtedly one of the most talked-about books of the year. "The Righteous Mind" spent weeks on the hardcover best-seller list. Mr. Haidt considers himself mostly a liberal, but his book has been especially popular in the conservative blogosphere. Some right-leaning intellectuals are even calling it the most important book of the year.

It's full of ammunition that conservatives will love to throw out at cocktail parties. His research shows that conservatives are much better at understanding and anticipating liberal attitudes than liberals are at appreciating where conservatives are coming from. Case in point: Conservatives know that liberals are repelled by cruelty to animals, but liberals don't think (or prefer not to believe) that conservatives are repelled too.

Mr. Haidt, until recently a professor of moral psychology at the University of Virginia, says the surveys conducted by his research team show that liberals are strong on evolved values he defines as caring and fairness. Conservatives value caring and fairness too but tend to emphasize the more tribal values like loyalty, authority and sanctity.

Conservatives, Mr. Haidt says, have been more successful politically because they play to the full spectrum of sensibilities, and because the full spectrum is necessary for a healthy society. An admiring review in the New York Times sums up this element of his argument: "Liberals dissolve moral capital too recklessly. Welfare programs that substitute public aid for spousal and parental support undermine the ecology of the family. Education policies that let students sue teachers erode classroom authority. Multicultural education weakens the cultural glue of assimilation."

Such a book is bound to run into the charge of scientism—claiming scientific authority for a mix of common sense, exhortation or the author's own preferences. Let it be said that Mr. Haidt is sensitive to this complaint. If he erred, he says, it was on the side of being accessible, readable and, he hopes, influential.

As we sit in his new office at New York University, he professes an immodest aim: He wants liberals and conservatives to listen to each other more, hate each other less, and to understand that their differences are largely rooted in psychology, not open-minded consideration of the facts. "My big issue, the one I'm somewhat evangelical about, is civil disagreement," he says.

A shorthand he uses is "follow the sacred"—and not in a good way. "Follow the sacred and there you will find a circle of motivated ignorance." Today's political parties are most hysterical, he says, on the issues they "sacralize." For the right, it's taxes. For the left, the sacred issues were race and gender but are becoming global warming and gay marriage.

Yet between the lines of his book is an even more dramatic claim: The same moral psychology that makes our politics so nasty also underlies the amazing triumph of the human species. "We shouldn't be here at all," he tells me. "When I think about life on earth, there should not be a species like us. And if there was, we should be out in the jungle killing each other in small groups. That's what you should expect. The fact that we're here [in politics] arguing viciously and nastily with each other, and no guns, that itself is a miracle. And I think we can make [our politics] a little better. That's my favorite theme."

Who is Jon Haidt? A nice Jewish boy from central casting, he grew up in Scarsdale, N.Y. His father was a corporate lawyer. "When the economy opened out in the '50s and '60s and Jews could go everywhere, he was part of that generation. He and all his buddies from Brooklyn did very well."

His family was liberal in the FDR tradition. At Yale he studied philosophy and, in standard liberal fashion, "emerged pretty convinced that I was right about everything." It took a while for him to discover the limits of that stance. "I wouldn't say I was mugged by reality. I would say I was gradually introduced to it academically," he says today.

In India, where he performed field studies early in his professional career, he encountered a society in some ways patriarchal, sexist and illiberal. Yet it worked and the people were lovely. In Brazil, he paid attention to the experiences of street children and discovered the "most dangerous person in the world is mom's boyfriend. When women have a succession of men coming through, their daughters will get raped," he says. "The right is right to be sounding the alarm about the decline of marriage, and the left is wrong to say, 'Oh, any kind of family is OK.' It's not OK."

At age 41, he decided to try to understand what conservatives think. The quest was part of his effort to apply his understanding of moral psychology to politics. He especially sings the praises of Thomas Sowell's "Conflict of Visions," which he calls "an incredible book, a brilliant portrayal" of the argument between conservatives and liberals about the nature of man. "Again, as a moral psychologist, I had to say the constrained vision [of human nature] is correct."

That is, our moral instincts are tribal, adaptive, intuitive and shaped by evolution to strengthen "us" against "them." He notes that, in the 1970s, the left tended to be categorically hostile to evolutionary explanations of human behavior. Yet Mr. Haidt, the liberal and self-professed atheist, says he now finds the conservative vision speaks more insightfully to our evolved nature in ways that it would be self-defeating to discount.

"This is what I'm trying to argue for, and this is what I feel I've discovered from reading a lot of the sociology," he continues. "You need loyalty, authority and sanctity"—values that liberals are often suspicious of—"to run a decent society."

Mr. Haidt, a less chunky, lower-T version of Adam Sandler, has just landed a new position at the Stern School of Business at NYU. He arrived with his two children and wife, Jane, after a successful and happy 16-year run at the University of Virginia. An introvert by his own account, and never happier than when laboring in solitude, he nevertheless sought out the world's media capital to give wider currency to the ideas in "The Righteous Mind."

Mr. Haidt's book, as he's the first to notice, has given comfort to conservatives. Its aim is to help liberals. Though he calls himself a centrist, he remains a strongly committed Democrat. He voted for one Republican in his life—in 2000 crossing party lines to cast a ballot for John McCain in the Virginia primary. "I wasn't trying to mess with the Republican primary," he adds. "I really liked McCain."

His disappointment with President Obama is quietly evident. Ronald Reagan understood that "politics is more like religion than like shopping," he says. Democrats, after a long string of candidates who flogged policy initiatives like items in a Wal-Mart circular, finally found one who could speak to higher values than self-interest. "Obama surely had a chance to remake the Democratic Party. But once he got in office, I think, he was consumed with the difficulty of governing within the Beltway."

The president has reverted to the formula of his party—bound up in what Mr. Haidt considers obsolete interest groups, battles and "sacred" issues about which Democrats cultivate an immunity to compromise.

Mr. Haidt lately has been speaking to Democratic groups and urging attachment to a new moral vision, albeit one borrowed from the Andrew Jackson campaign of 1828: "Equal opportunity for all, special privileges for none."

Racial quotas and reflexive support for public-sector unions would be out. His is a reformed vision of a class-based politics of affirmative opportunity for the economically disadvantaged. "I spoke to some Democrats about things in the book and they asked, how can we weaponize this? My message to them was: You're not ready. You don't know what you stand for yet. You don't have a clear moral vision."

Like many historians of modern conservatism, he cites the 1971 Powell Memo—written by the future Supreme Court Justice Lewis Powell Jr.—which rallied Republicans to the defense of free enterprise and limited government. Democrats need their own version of the Powell Memo today to give the party a new and coherent moral vision of activist government in the good society. "The moral rot a [traditional] liberal welfare state creates over generations—I mean, the right is right about that," says Mr. Haidt, "and the left can't see it."

Yet one challenge becomes apparent in talking to Mr. Haidt: He's read his book and cheerfully acknowledges that he avoids criticizing too plainly the "sacralized" issues of his liberal friends.

In his book, for instance, is passing reference to Western Europe's creation of the world's "first atheistic societies," also "the least efficient societies ever known at turning resources (of which they have a lot) into offspring (of which they have very few)."

What does he actually mean? He means Islam: "Demographic curves are very hard to bend," he says. "Unless something changes in Europe in the next century, it will eventually be a Muslim continent. Let me say it diplomatically: Most religions are tribal to some degree. Islam, in its holy books, seems more so. Christianity has undergone a reformation and gotten some distance from its holy books to allow many different lives to flourish in Christian societies, and this has not happened in Islam."

Mr. Haidt is similarly tentative in spelling out his thoughts on global warming. The threat is real, he suspects, and perhaps serious. "But the left is now embracing this as their sacred issue, which guarantees that there will be frequent exaggerations and minor—I don't want to call it fudging of data—but there will be frequent mini-scandals. Because it's a moral crusade, the left is going to have difficulty thinking clearly about what to do."

Mr. Haidt, I observe, is noticeably less delicate when stepping on the right's toes. He reviles George W. Bush, whom he blames for running up America's debt and running down its reputation. He blames Newt Gingrich for perhaps understanding his book's arguments too well and importing an uncompromising moralistic language into the partisan politics of the 1990s.

Mr. Haidt also considers today's Republican Party a curse upon the land, even as he admires conservative ideas. He says its defense of lower taxes on capital income—mostly reported by the rich—is indefensible. He dismisses Mitt Romney as a "moral menial," a politician so cynical about the necessary cynicism of politics that he doesn't bother to hide his cynicism. (Some might call that a virtue.) He finds it all too typical that Republicans abandoned their support of the individual health-care mandate the moment Mr. Obama picked it up (though he also finds Chief Justice John Roberts's bend-over-backwards effort to preserve conservative constitutional principle while upholding ObamaCare "refreshing").

Why is his language so much less hedged when discussing Republicans? "Liberals are my friends, my colleagues, my social world," he concedes. Liberals also are the audience he hopes most to influence, helping Democrats to recalibrate their political appeal and their attachment to a faulty welfare state.

To which a visitor can only say, Godspeed. Even with his parsing out of deep psychological differences between conservatives and liberals, American politics still seem capable of a useful fluidity. To make progress we need both parties, and right now we could use some progress on taxes, incentives, growth and entitlement reform.

Mr. Jenkins writes the Journal's Business World column.

Tuesday, May 15, 2012

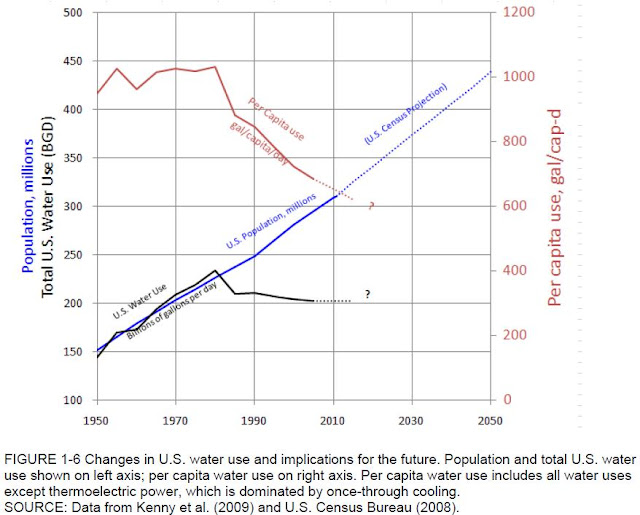

Changes in U.S. water use and implications for the future

It is interesting to see some data in Water Reuse: Expanding the Nation's Water Supply Through Reuse of Municipal Wastewater (http://www.nap.edu/catalog.php?record_id=13303), a National Research Council publication.

See for example figure 1-6, p 17, changes in U.S. water use and implications for the future:

Thursday, February 23, 2012

Can Institutional Reform Reduce Job Destruction and Unemployment Duration?

Can Institutional Reform Reduce Job Destruction and Unemployment Duration? Yes It Can. By Esther Perez & Yao Yao

IMF Working Paper No. 12/54

February 2012

http://www.imf.org/external/pubs/cat/longres.aspx?sk=25738.0

Summary: We read search theory’s unemployment equilibrium condition as an Iso-Unemployment Curve (IUC). The IUC is the locus of job destruction rates and expected unemployment durations rendering the same unemployment level. A country’s position along the curve reveals its preferences over the destruction-duration mix, while its distance from the origin indicates the unemployment level at which such preferences are satisfied. Using a panel of 20 OECD countries over 1985-2008, we find employment protection legislation to have opposing effects on destructions and durations, while the effects of the remaining key institutional factors on both variables tend to reinforce each other. Implementing the right reforms could reduce job destruction rates by about 0.05 to 0.25 percentage points and shorten unemployment spells by around 10 to 60 days. Consistent with this, unemployment rates would decline by between 0.75 and 5.5 percentage points, depending on a country’s starting position.

Introduction

This paper investigates how labor market policies affect the unemployment rate through its two defining factors, the duration of unemployment spells and job destruction rates. To this aim, we look at search theory’s unemployment equilibrium condition as an Iso-Unemployment Curve (IUC). The IUC represents the locus of job destruction rates and expected unemployment durations rendering the same unemployment level. A country’s position along the curve reveals its preferences over the destruction-duration mix, while its distance from the origin indicates the unemployment level at which such preferences are satisfied. We next provide micro-foundations for the link between destructions, durations and policy variables. This allows us to explore the relevance of institutional features using a sample of 20 OECD countries over the period 1985-2008.
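For readers who want the algebra behind the curve, here is a minimal sketch. It assumes the textbook steady-state flow condition from search theory, in which flows into unemployment equal flows out of it. The symbols are our own notation, not necessarily the paper's: s is the job destruction rate, f the job finding rate, d = 1/f the expected unemployment duration, and u the unemployment rate. The paper's exact specification may differ.

```latex
\begin{align}
s\,(1-u) &= f\,u \\
u &= \frac{s}{s+f} = \frac{s\,d}{1+s\,d}, \qquad d = \frac{1}{f} \\
s\,d &= \frac{u}{1-u}
\end{align}
```

Under these assumptions, holding u fixed makes the product s·d constant, so each IUC is a hyperbola in the (s, d) plane, and curves for higher unemployment lie farther from the origin.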

The empirical literature investigating the influence of labor market institutions on overall unemployment rate is sizable (see, for instance, Blanchard and Wolfers, 1999, and Nickell and others, 2002). Equally numerous are the studies splitting unemployment into job creation and job destruction flows (see, for example, Blanchard, 1998, Shimer, 2007, and Elsby and others, 2008). This work connects these two strands of the literature by investigating how labor market policies shape both job separations and unemployment spells, which together determine the overall unemployment rate in the economy. The IUC schedule used in our analysis is novel and is motivated by the need to understand the nature of unemployment, as essentially coming from destructions, durations or a combination of both these factors. This can help clarify whether policy makers should focus primarily on speeding up workers’ reallocation across job positions rather than protecting them in the workplace.

One fundamental question raised in this context is whether countries with dynamic labor markets significantly outperform countries with more stagnant markets. By dynamic (stagnant) we mean labor markets displaying high (low) levels of workers’ turnover in and out of unemployment. Is it the case that countries featuring high job destruction rates but brief unemployment spells tend to display lower unemployment rates than labor markets characterized by limited job destruction but longer unemployment durations? And how do institutional features shape destructions and durations?

Conclusions

This paper reads the basic unemployment equilibrium condition postulated by search theory as an Iso-Unemployment Curve (IUC). The IUC is the locus of job destruction rates and expected unemployment durations that render the same unemployment level. We use this schedule to classify countries according to their preferences over the job destruction-unemployment duration trade-off. The upshot of this analysis is that labor markets characterized by high levels of job destruction but brief unemployment spells do not necessarily outperform countries characterized by the opposite behavior. But, the IUC construct makes it clear that high unemployment rates result from extreme values in either durations or destructions, or intermediate-to-high levels in both.

Looking at unemployment through the lenses of the IUC schedule focuses the attention on each economy’s revealed social preferences over the destruction-duration mix. Policy packages fighting unemployment should take into consideration such preferences. Some countries seem to tolerate relatively high destruction rates as long as unemployment duration is short. Others are biased towards job security and do not mind financing longer job search spells. A few unfortunate countries are trapped in a high inflow-high duration combination, seemingly condemned for long periods of high unemployment.

An optimistic message arising from this study, especially for countries located on higher IUCs, is that an ambitious structural reform program tackling high labor tax wedges, activating unemployment benefits and removing barriers to competition in key services can effectively contain job losses, limit the duration of unemployment spells and yield substantial reduction in unemployment.

Tuesday, January 31, 2012

Macroeconomic and Welfare Costs of U.S. Fiscal Imbalances

Macroeconomic and Welfare Costs of U.S. Fiscal Imbalances. By Bertrand Gruss and Jose L. Torres

IMF Working Paper No. 12/38

http://www.imf.org/external/pubs/cat/longres.aspx?sk=25691.0

Summary: In this paper we use a general equilibrium model with heterogeneous agents to assess the macroeconomic and welfare consequences in the United States of alternative fiscal policies over the medium-term. We find that failing to address the fiscal imbalances associated with current federal fiscal policies for a prolonged period would result in a significant crowding-out of private investment and a severe drag on growth. Compared to adopting a reform that gradually reduces federal debt to its pre-crisis level, postponing debt stabilization for two decades would entail a permanent output loss of about 17 percent and a welfare loss of almost 7 percent of lifetime consumption. Moreover, the long-run welfare gains from the adjustment would more than compensate the initial losses associated with the consolidation period.

The authors start the paper this way:

“History makes clear that failure to put our fiscal house in order will erode the vitality of our economy, reduce the standard of living in the United States, and increase the risk of economic and financial instability.”

Ben S. Bernanke, 2011 Annual Conference of the Committee for a Responsible Federal Budget

Excerpts

Introduction

One of the main legacies of the Great Recession has been the sharp deterioration of public finances in most advanced economies. In the U.S., the federal debt held by the public surged from 36 percent of GDP in 2007 to around 70 percent in 2011. This rise in debt, however impressive, gets dwarfed when compared to the medium-term fiscal imbalances associated with entitlement programs and revenue-constraining measures. For example, the non-partisan Congressional Budget Office (CBO) foresees the debt held by the public to exceed 150 percent of GDP by 2030 (see Figure 1). Similarly, Batini et al. (2011) estimate that closing the federal “fiscal gap” associated with current fiscal policies would require a permanent fiscal adjustment of about 15 percent of GDP.

While the crisis brought the need to address the U.S. medium-term fiscal imbalances to the center of the policy debate, the costs they entail are not necessarily well understood. Most of the long-term fiscal projections regularly produced in the U.S. and used to guide policy discussions are derived from debt accounting exercises. A shortcoming of such approach is that relative prices and economic activity are unaffected by different fiscal policies, and that it cannot be used for welfare analysis. To overcome those limitations and contribute to the debate, in this paper we use a rational expectations general equilibrium framework to assess the medium-term macroeconomic and welfare consequences of alternative fiscal policies in the U.S. We find that failing to address the federal fiscal imbalances for a prolonged period would result in a significant crowding-out of private investment and drag on growth, entailing a permanent output loss of about 17 percent and welfare loss of almost 7 percent of lifetime consumption. Moreover, we find that the long-run welfare gains from stabilizing the federal debt at a low level more than compensate the welfare losses associated with the consolidation period. Our results also suggest that the crowding-out effects of public debt are an order of magnitude bigger than the policy mix effects: Reducing promptly the level of public debt is significantly more important for activity and welfare than differences in the size of government or the design of the tax reform.

The focus of this study is on the costs and benefits of fiscal consolidation for the U.S. over the medium-term to long-term. In this sense, we explicitly leave aside some questions on fiscal consolidation that, while very relevant for the short-run, cannot be appropriately tackled in this framework. One example is assessing the effects of back-loading the pace of consolidation in the near term—while announcing a credible medium-run adjustment—in the current context of growth below potential and nominal interest rates close to zero. A related relevant question is what mix of fiscal instruments in the near term would make fiscal consolidation less costly in such context. While interesting, these questions are beyond the scope of this paper.

The quantitative framework we use is a dynamic stochastic general equilibrium model with heterogeneous agents, and endogenous occupational choice and labor supply. In the model, ex-ante identical agents face idiosyncratic entrepreneurial ability and labor productivity shocks, and choose their occupation. Agents can become either entrepreneurs and hire other workers, or they can become workers and decide what fraction of their time to work for other entrepreneurs. In order to make a realistic analysis of the policy options, we assume that the government does not have access to lump sum taxation. Instead, the government raises distortionary taxes on labor, consumption, and income, and issues one period non-contingent bonds to finance lump sum transfers to all agents, other noninterest spending, and service its debt. Given that the core issue threatening debt sustainability in the U.S. is the explosive path of spending on entitlement programs, the heterogeneous agents assumption is crucial: Our model allows for a meaningful tradeoff between distortionary taxation and government transfers, as the latter insure households from attaining very low levels of consumption. The complexity this introduces forces us to sacrifice on some dimension: Agents in our model face individual uncertainty but have perfect foresight about future paths of fiscal instruments and prices. Allowing for uncertainty about the timing and composition of the adjustment would be interesting, but would severely increase the computational cost.

We compare model simulations from four alternative fiscal scenarios. The benchmark scenario maintains current fiscal policies for about twenty years. More precisely, in this scenario we feed the model with the spending (noninterest mandatory and discretionary) and revenue projections from CBO’s Alternative Fiscal scenario (CBO 2011)—allowing all other variables to adjust endogenously—until about 2030, when we assume that the government increases all taxes to stabilize the debt at its prevailing level. Three alternative scenarios assume, instead, the immediate adoption of fiscal reform aimed at gradually reducing the federal debt to its pre-crisis level. There are of course many possible parameterizations for such reform reflecting, among other things, different views about the desired size of the public sector and the design of the tax system. We first consider an adjustment scenario assuming the same size of government and tax structure than the benchmark one in order to disentangle the sole effect of delaying fiscal adjustment—and stabilizing the debt ratio at a high level. We then explore the effect of alternative designs for the consolidation plan by considering two alternative adjustment scenarios that incorporate spending and revenue measures proposed by the bipartisan December 2010 Bowles-Simpson Commission.

This paper is related to different strands of the macro literature on fiscal issues. First, it is related to studies using general equilibrium models to analyze the implications of fiscal consolidations. Forni et al. (2010) use perfect-foresight simulations from a two-country dynamic model to compute the macroeconomic consequences of reducing the debt to GDP ratio in Italy. Coenen et al. (2008) analyze the effects of a permanent reduction in public debt in the Euro Area using the ECB NAWM model. Clinton et al. (2010) use the IMF GIMF model to examine the macroeconomic effects of permanently reducing government fiscal deficits in several regions of the world at the same time. Davig et al. (2010) study the effects of uncertainty about when and how policy will adjust to resolve the exponential growth in entitlement spending in the U.S.

The main difference with our paper is that these works rely on representative agent models that cannot adequately capture the redistributive and insurance effects of fiscal policy. As a result, such models have by construction a positive bias towards fiscal reforms that lower transfers, reduce the debt, and eventually lower the distortions by lowering tax rates. Another unappealing feature of the representative agent models for analyzing the merits of a fiscal consolidation is that, in steady state, the equilibrium real interest rate is independent of the debt level, whereas in our model the equilibrium real interest rate is endogenously affected by the level of government debt, which is consistent with the empirical literature.

Second, the paper is related to previous work using general equilibrium models with infinitely lived heterogeneous agents, occupational choice, and borrowing constraints to analyze fiscal reforms, such as Li (2002), Meh (2005) and Kitao (2008). Differently from these papers, which impose a balanced budget every period, we focus on the effects of debt dynamics and fiscal consolidation reforms. Also, since we focus on reforms over an extended period of time we augment our model to include growth. Moreover and as in Kitao (2008), we explicitly compute the transitional dynamics after the reforms and analyze the welfare costs associated with the transition.

Results: The long-run effects

What is the effect of delaying fiscal consolidation on...?

Capital and Labor. The high interest rates in the delay scenario imply that for those entrepreneurs that do not have enough internal funding, the cost of borrowing sufficient capital is too high for them to compensate for their income under the outside option (i.e. wage income). As a result, the share of entrepreneurs in the delay scenario is roughly one half the share under the passive adjust scenario and the aggregate capital stock is about 17 percent lower. The higher share of workers in the delay scenario implies a higher labor supply. Together with a lower labor demand (due to a lower capital stock), this leads to a real wage that is more than 19 percent lower. Total hours worked are similar in the two steady states as lower individual hours offset the higher share of workers.

Output and Consumption. The crowding-out effect of fiscal policy under the delay scenario leads to large permanent losses in output and consumption. The level of GDP is about 16 percent lower in the delay than in the passive adjust scenario and aggregate consumption is 3.5 percent lower. Moreover and as depicted in Figure 4, the wealth distribution is significantly more concentrated under the delay scenario.

Welfare. The effect of lower aggregate consumption and more concentrated wealth distribution under the delay scenario implies that welfare is significantly lower than in the passive adjust scenario. Using a consumption equivalent welfare metric we find that the average difference in steady state welfare across scenarios would be equivalent to permanently increasing consumption to each agent in the delay scenario economy by 6 percent while leaving their amount of leisure unchanged. We interpret this differential as the permanent welfare gain from stabilizing public debt at its pre-crisis level. A breakup of the welfare comparison of steady states by wealth deciles, shown in Figure 5, suggests that all agents up to the 7th deciles of the wealth distribution would be better off under fiscal consolidation.

What are the effects of alternative fiscal consolidation plans?

Capital and Output. The smaller size of government in the two active adjust scenarios relative to the passive one translates into higher capital stocks and higher output, increasing the gap with the delay scenario. Regarding the tax reform, the comparison between the two active adjust scenarios reveals that distributing the higher tax pressure on all taxes, including consumption taxes, lowers distortions and results in a higher capital stock and in a more growth-friendly consolidation: the difference in the output level between the delay and active (1) adjust scenario stands at 17.7 percent, while this difference is 17.1 and 15.7 percent for the active (2) adjust and passive adjust scenarios respectively.

Consumption and Welfare. While all adjust scenarios reveal a significant difference in long-run per-capita consumption and welfare with respect to postponing fiscal consolidation, the relative performance among them also favors a smaller size of government and a balanced tax reform. The difference in per-capita consumption with the delay scenario is 3.5, 5.8 and 5.4 percent respectively for the passive, active (1) and active (2) adjustment scenarios. The policy mix under the active (1) adjust scenario also ranks the best in terms of welfare, with the welfare differential with respect to the delay scenario being more than 7 percent of lifetime consumption.

Overall Welfare Cost of Delaying Fiscal Consolidation

In the long-run the average welfare in the adjust scenario is higher than in the delay scenario by 6.7 percent of lifetime consumption. However, along the transition to the new steady state the adjust scenario is characterized by a costly fiscal adjustment that entails a lower path for per capita consumption, so it might not be necessarily true that an adjustment is optimal.

To assess the overall welfare ranking of the alternative fiscal paths, we extend the analysis of section III.A. by computing, for the delay and adjust scenarios, the average expected discounted lifetime utility starting in 2011. We find that even taking into account the costs along the transition, the adjust scenario entails an average welfare gain for the economy. The infinite horizon welfare comparison suggests that consumption under the delay scenario should be raised by 0.8 percent for all agents in the economy in all periods to attain the same average utility as under the adjust scenario (while leaving leisure unchanged). A breakup of this result by wealth deciles (see Figure 9) suggests that, as in the long-run comparison, the wealthiest decile of the population is worse off under the adjust scenario. Differently from the steady state comparison, however, the first four deciles also face welfare losses in the adjust scenario.

A few elements suggest that the average welfare gain reported (0.8 percent in consumption-equivalent terms) can be considered a lower bound. First, the calibrated subjective discount factor from the model used to compute the present value of the utility paths entails a yearly discount rate of about 9.9 percent. With such a high discount rate, the long-run benefits from the delay scenario are heavily discounted. Using a discount rate of 3 percent, the one used by CBO for calculating the present value of future streams of revenues and outlays of the government’s trust funds, would imply a consumption-equivalent welfare gain of 5.9 percent (instead of 0.8 percent). Second, the model we are using has infinitely lived agents, so we are not explicitly accounting for the distribution of costs and benefits across generations.

Conclusions

We compare the macroeconomic and welfare effects of failing to address the fiscal imbalances in the U.S. for an extended period with those of reducing federal debt to its pre-crisis level and find that the stakes are quite high. Our model simulations suggest that the continuous rise in federal debt implied by current policies would have sizeable effects on the economy, even under certainty that the federal debt will be fully repaid. The model predicts that the mounting debt ratio would increase the cost of borrowing and crowd out private capital from productive activities, acting as a significant drag on growth. Compared to stabilizing federal debt at its pre-crisis level, continuation of current policies for two decades would entail a permanent output loss of around 17 percent. The associated drop in per-capita consumption, combined with the worsening of wealth concentration that the model suggests, would cause a large average welfare loss in the long-run, equivalent to about 7 percent of lifetime consumption. Our results also suggest that reducing promptly the level of public debt is significantly more important for activity and welfare than differences in the size of government or the design of the tax reform. Accordingly, even under consensus on the desirability to increase primary spending in the medium-run, it would be preferable to start from a fiscal house in order.

The model adequately captures that the fiscal consolidation needed to reduce federal debt to its pre-crisis level would be very costly. Still, extending the welfare comparison to include also the transition period suggests that a fiscal consolidation would be on average beneficial. After taking into account the short-term costs, the average welfare gain from fiscal consolidation stands at 0.8 percent of lifetime consumption.

We argue that our welfare results can be interpreted as a lower bound. This is because, first, we abstract from default so our simulations ignore the potential effect of higher public debt on the risk premium. However, as the debt crisis in Europe has revealed, interest rates can soar quickly if investors lose confidence in the ability of a government to manage its fiscal policy. Considering this effect would have magnified the long-run welfare costs of stabilizing the debt ratio at a higher level. Second, the high discount rate we use in the computation of the present value of utility exacerbates the short-term costs. If we recomputed the overall welfare effects in our scenarios using a discount rate of 3 percent, the welfare gain from a consolidation would be 5.9 percent of lifetime utility, instead of 0.8 percent. An argument for considering a lower rate to compute the present value of welfare is that by assuming infinitely lived agents we are not attaching any weight to unborn agents that would be affected by the permanent costs of delaying the resolution of fiscal imbalances and do not enjoy the expansionary effects of the unsustainable policy along the transitional dynamics.
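
The role of the discount rate can be illustrated with simple present-value arithmetic. The sketch below is not the paper's welfare computation; it only compares how much weight the two rates quoted in the excerpt (about 9.9 percent and 3 percent per year) place on gains that arrive late. The 20-year horizon and the function names are assumptions chosen for illustration.

```python
def discount_weight(rate: float, years: int) -> float:
    """Present-value weight on one unit received `years` from now."""
    return 1.0 / (1.0 + rate) ** years


def perpetuity_value(rate: float, start_year: int) -> float:
    """Present value of one unit per year forever, first received in
    `start_year` (geometric series: sum over t >= start_year of (1+rate)^-t)."""
    return discount_weight(rate, start_year - 1) / rate


# Hypothetical horizon: permanent gains that only begin after 20 years.
for rate in (0.099, 0.03):
    w = discount_weight(rate, 20)
    pv = perpetuity_value(rate, 21)
    print(f"rate={rate:.3f}: weight at year 20 = {w:.3f}, "
          f"PV of a permanent unit gain from year 21 = {pv:.2f}")
```

Gains that start two decades out count for far less at the higher rate, which is why the overall welfare gain is so sensitive to this choice.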

The results in this paper are not exempt from the perils inherent to any model-dependent analysis. In order to address features that we believe are crucial for the issue at hand, we needed to simplify the model on other dimensions. For example, given the current reliance of the U.S. on foreign financing, the closed economy assumption used in this paper may be questionable. However, we believe that it would also be problematic to assume that the world interest rate will remain unaffected if the U.S. continues to considerably increase its financing needs. Moreover and as mentioned before, the model ignores the effect of higher debt on the perceived probability of default, which would likely counteract the effect in our results from failing to incorporate the government’s access to foreign borrowing. The model also abstracts from nominal issues and real and nominal rigidities typically introduced in the new Keynesian models commonly used for policy analysis. However, we believe that while these features are particularly relevant for short-term cyclical considerations, they matter much less for the longer-term issues addressed in this paper.

Friday, October 21, 2011

The Case Against Global-Warming Skepticism

The Case Against Global-Warming Skepticism. By Richard A Muller

There were good reasons for doubt, until now.

http://online.wsj.com/article/SB10001424052970204422404576594872796327348.html

WSJ, Oct 21, 2011

Are you a global warming skeptic? There are plenty of good reasons why you might be.

As many as 757 stations in the United States recorded net surface-temperature cooling over the past century. Many are concentrated in the southeast, where some people attribute tornadoes and hurricanes to warming.

The temperature-station quality is largely awful. The most important stations in the U.S. are included in the Department of Energy's Historical Climatology Network. A careful survey of these stations by a team led by meteorologist Anthony Watts showed that 70% of these stations have such poor siting that, by the U.S. government's own measure, they result in temperature uncertainties of between two and five degrees Celsius or more. We do not know how much worse are the stations in the developing world.

Using data from all these poor stations, the U.N.'s Intergovernmental Panel on Climate Change estimates an average global 0.64ºC temperature rise in the past 50 years, "most" of which the IPCC says is due to humans. Yet the margin of error for the stations is at least three times larger than the estimated warming.

We know that cities show anomalous warming, caused by energy use and building materials; asphalt, for instance, absorbs more sunlight than do trees. Tokyo's temperature rose about 2ºC in the last 50 years. Could that rise, and increases in other urban areas, have been unreasonably included in the global estimates? That warming may be real, but it has nothing to do with the greenhouse effect and can't be addressed by carbon dioxide reduction.

Moreover, the three major temperature analysis groups (the U.S.'s NASA and National Oceanic and Atmospheric Administration, and the U.K.'s Met Office and Climatic Research Unit) analyze only a small fraction of the available data, primarily from stations that have long records. There's a logic to that practice, but it could lead to selection bias. For instance, older stations were often built outside of cities but today are surrounded by buildings. These groups today use data from about 2,000 stations, down from roughly 6,000 in 1970, raising even more questions about their selections.

On top of that, stations have moved, instruments have changed and local environments have evolved. Analysis groups try to compensate for all this by homogenizing the data, though there are plenty of arguments to be had over how best to homogenize long-running data taken from around the world in varying conditions. These adjustments often result in corrections of several tenths of one degree Celsius, significant fractions of the warming attributed to humans.

And that's just the surface-temperature record. What about the rest? The number of named hurricanes has been on the rise for years, but that's in part a result of better detection technologies (satellites and buoys) that find storms in remote regions. The number of hurricanes hitting the U.S., even the more intense Category 4 and 5 storms, has been gradually decreasing since 1850. The number of detected tornadoes has been increasing, possibly because radar technology has improved, but the number that touch down and cause damage has been decreasing. Meanwhile, the short-term variability in U.S. surface temperatures has been decreasing since 1800, suggesting a more stable climate.

Without good answers to all these complaints, global-warming skepticism seems sensible. But now let me explain why you should not be a skeptic, at least not any longer.

Over the last two years, the Berkeley Earth Surface Temperature Project has looked deeply at all the issues raised above. I chaired our group, which just submitted four detailed papers on our results to peer-reviewed journals. We have now posted these papers online at www.BerkeleyEarth.org to solicit even more scrutiny.

Our work covers only land temperature—not the oceans—but that's where warming appears to be the greatest. Robert Rohde, our chief scientist, obtained more than 1.6 billion measurements from more than 39,000 temperature stations around the world. Many of the records were short in duration, and to use them Mr. Rohde and a team of esteemed scientists and statisticians developed a new analytical approach that let us incorporate fragments of records. By using data from virtually all the available stations, we avoided data-selection bias. Rather than try to correct for the discontinuities in the records, we simply sliced the records where the data cut off, thereby creating two records from one.
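
The slicing step described above can be illustrated with a minimal sketch. This is not the Berkeley Earth code; the function name, the (month index, temperature) record layout, the sample values and the gap threshold are all assumptions made up for illustration. The idea is simply that a record with a break in it is cut at the break and treated as two independent fragments instead of being adjusted.

```python
def split_at_gaps(record, max_gap=1):
    """Cut a station record into fragments wherever the data break off.

    `record` is a list of (month_index, temperature_c) pairs sorted by
    month_index. A new fragment starts whenever two consecutive readings
    are more than `max_gap` months apart. (Hypothetical helper, for
    illustration only.)
    """
    segments = []
    current = []
    for month, temp in record:
        # A jump larger than max_gap closes the current fragment.
        if current and month - current[-1][0] > max_gap:
            segments.append(current)
            current = []
        current.append((month, temp))
    if current:
        segments.append(current)
    return segments


# Invented sample: readings for months 0-2, then nothing until month 7.
record = [(0, 11.2), (1, 11.5), (2, 11.1), (7, 12.9), (8, 13.0)]
segments = split_at_gaps(record)
print(len(segments))  # number of fragments created from the one record
```

Each fragment can then be fed to the averaging step as if it came from a separate station, which is what "creating two records from one" amounts to in this sketch.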

We discovered that about one-third of the world's temperature stations have recorded cooling temperatures, and about two-thirds have recorded warming. The two-to-one ratio reflects global warming. The changes at the locations that showed warming were typically between 1ºC and 2ºC, much greater than the IPCC's average of 0.64ºC.

To study urban-heating bias in temperature records, we used satellite determinations that subdivided the world into urban and rural areas. We then conducted a temperature analysis based solely on "very rural" locations, distant from urban ones. The result showed a temperature increase similar to that found by other groups. Only 0.5% of the globe is urbanized, so it makes sense that even a 2ºC rise in urban regions would contribute negligibly to the global average.
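
The arithmetic behind that last point can be checked in a few lines. This is a back-of-the-envelope sketch, not part of the Berkeley Earth analysis: it takes the 0.5% urban fraction and the 2ºC urban rise quoted above, and it assumes, purely for illustration, that the global average is a simple area-weighted mean and that the urban rise is entirely spurious. The function name is hypothetical.

```python
def urban_contribution(urban_fraction, urban_rise_c):
    """Area-weighted contribution of urban warming to a global mean, in deg C."""
    return urban_fraction * urban_rise_c


# Figures quoted in the text: 0.5% of the globe urbanized, 2 deg C urban rise.
contribution = urban_contribution(0.005, 2.0)

# Compare with the IPCC's 0.64 deg C estimate cited earlier in the article.
share_of_ipcc_estimate = contribution / 0.64

print(f"{contribution:.3f} deg C, {share_of_ipcc_estimate:.1%} of 0.64 deg C")
```

Under these assumptions the urban term comes to about a hundredth of a degree, a small fraction of the 0.64ºC estimate.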

What about poor station quality? Again, our statistical methods allowed us to analyze the U.S. temperature record separately for stations with good or acceptable rankings, and those with poor rankings (the U.S. is the only place in the world that ranks its temperature stations). Remarkably, the poorly ranked stations showed no greater temperature increases than the better ones. The most likely explanation is that while low-quality stations may give incorrect absolute temperatures, they still accurately track temperature changes.

When we began our study, we felt that skeptics had raised legitimate issues, and we didn't know what we'd find. Our results turned out to be close to those published by prior groups. We think that means that those groups had truly been very careful in their work, despite their inability to convince some skeptics of that. They managed to avoid bias in their data selection, homogenization and other corrections.

Global warming is real. Perhaps our results will help cool this portion of the climate debate. How much of the warming is due to humans and what will be the likely effects? We made no independent assessment of that.

Mr. Muller is a professor of physics at the University of California, Berkeley, and the author of "Physics for Future Presidents" (W.W. Norton & Co., 2008).

There were good reasons for doubt, until now.

http://online.wsj.com/article/SB10001424052970204422404576594872796327348.html

WSJ, Oct 21, 2011

Sunday, January 23, 2011

Four of every 10 rows of U.S. corn now go for fuel, not food

Amber Waves of Ethanol. WSJ Editorial

WSJ, Jan 22, 2011

http://online.wsj.com/article/SB10001424052748703396604576088010481315914.html

The global economy is getting back on its feet, but so too is an old enemy: food inflation. The United Nations benchmark index hit a record high last month, raising fears of shortages and higher prices that will hit poor countries hardest. So why is the United States, one of the world's biggest agricultural exporters, devoting more and more of its corn crop to . . . ethanol?

The nearby chart, based on data from the Department of Agriculture, shows the remarkable trend over a decade. In 2001, only 7% of U.S. corn went for ethanol, or about 707 million bushels. By 2010, the ethanol share was 39.4%, or nearly five billion bushels out of total U.S. production of 12.45 billion bushels. Four of every 10 rows of corn now go to produce fuel for American cars or trucks, not food or feed.
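
The figures in that paragraph can be cross-checked with simple arithmetic. The sketch below uses only the numbers quoted above (the 2010 production total, the 2010 ethanol share and the 2001 ethanol volume); the variable names are ours, and the results are rounded checks on the editorial's figures, not independent USDA data.

```python
# Figures quoted in the editorial.
total_2010_bushels = 12.45e9   # total U.S. corn production, 2010
ethanol_share_2010 = 0.394     # share of the 2010 crop going to ethanol
ethanol_2001_bushels = 707e6   # corn going to ethanol, 2001

# "Nearly five billion bushels" in 2010.
ethanol_2010_bushels = total_2010_bushels * ethanol_share_2010

# "Four of every 10 rows": the share expressed per ten rows of corn.
rows_per_ten = ethanol_share_2010 * 10

# Growth in corn-for-ethanol volume between 2001 and 2010.
growth_factor = ethanol_2010_bushels / ethanol_2001_bushels

print(f"{ethanol_2010_bushels / 1e9:.2f} billion bushels")
print(f"{rows_per_ten:.1f} rows in 10")
print(f"{growth_factor:.1f}x the 2001 volume")
```

The numbers are consistent with one another: 39.4% of 12.45 billion bushels is a little over 4.9 billion, which rounds to four rows in ten and is roughly seven times the 2001 volume.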

This trend is the deliberate result of policies designed to subsidize ethanol. Note the surge in the middle of the last decade when Congress began to legislate renewable fuel mandates and many states banned MTBE, which had competed with ethanol but ran afoul of the green and corn lobbies.

This carve-out of nearly half of the U.S. corn crop for fuel is increasing even as global food supply is struggling to meet rising demand. U.S. farmers account for about 39% of global corn production and about 16% of that crop is exported, so U.S. corn stocks can influence the world price. Chicago Board of Trade corn March futures recently hit 30-month highs of $6.67 a bushel, up from $4 a bushel a year ago.

Demand from developing nations like China is also playing a role in rising prices, and in our view so is the loose monetary policy of the U.S. Federal Reserve that has increased the price of nearly all commodities traded in dollars.

But reduced corn food supply undoubtedly matters. About 40% of U.S. corn production is used to produce feed for animals. As corn prices rise, beef, poultry and other prices rise, too. The price squeeze has already contributed to the bankruptcy of companies like Texas-based Pilgrim's Pride Corp. and Delaware-based poultry maker Townsends Inc. over the past few years.

This damage coincides with a growing consensus that ethanol achieves none of its alleged policy goals. Ethanol supporters claim the biofuel reduces U.S. dependence on foreign oil and provides a cleaner source of energy. But Cornell University scientist David Pimentel calculates that if the entire U.S. corn crop were devoted to ethanol production, it would satisfy only 4% of U.S. oil consumption.

The Environmental Protection Agency has found that ethanol production has a minimal to negative impact on the environment. Even Al Gore, once an ethanol evangelist, now says his support had more to do with Presidential politics in Iowa and admits the fuel provides little or no environmental gain.